Personalising Open-Source Local LLM vs. Using Closed-Source LLM (APIs)

Large Language Models (LLMs) have become powerful tools capable of generating human-like text, automating tasks, and improving user experiences. However, companies face a critical decision: should they invest in personalising local LLMs through training and fine-tuning, or should they choose the convenience of LLM APIs, also known as closed-source models, provided by tech giants such as OpenAI? This article explores the details of both approaches, offering insights to help you make an informed choice that aligns with your company’s objectives and resources.

Key Highlights

What open-source local LLMs and closed-source LLM APIs are, and how they differ

Personalising open-source local LLM models offers deep customisation and complete control over your AI solutions

LLM APIs provide a convenient solution with minimal setup

Running LLMs locally reduces latency, while LLM APIs ensure reliable and consistent service

Local LLMs offer strong data privacy and can be more cost-effective for large-scale use, whereas LLM APIs... read about that and more in our article

Understanding the Local LLM and LLM API

When it comes to LLMs, there are two primary routes to consider: personalising open-source models such as Llama, or using ready-made APIs such as OpenAI's. Both have distinct advantages and challenges.

Open-source LLM:

Personalising open-source local LLMs involves training and fine-tuning models on your own hardware. This approach offers a high degree of customisation, allowing you to tailor the models to meet specific requirements.

LLM API:

LLM APIs offer a convenient and scalable solution for integrating language models into your applications. Tech giants provide these APIs, which require minimal effort to implement.

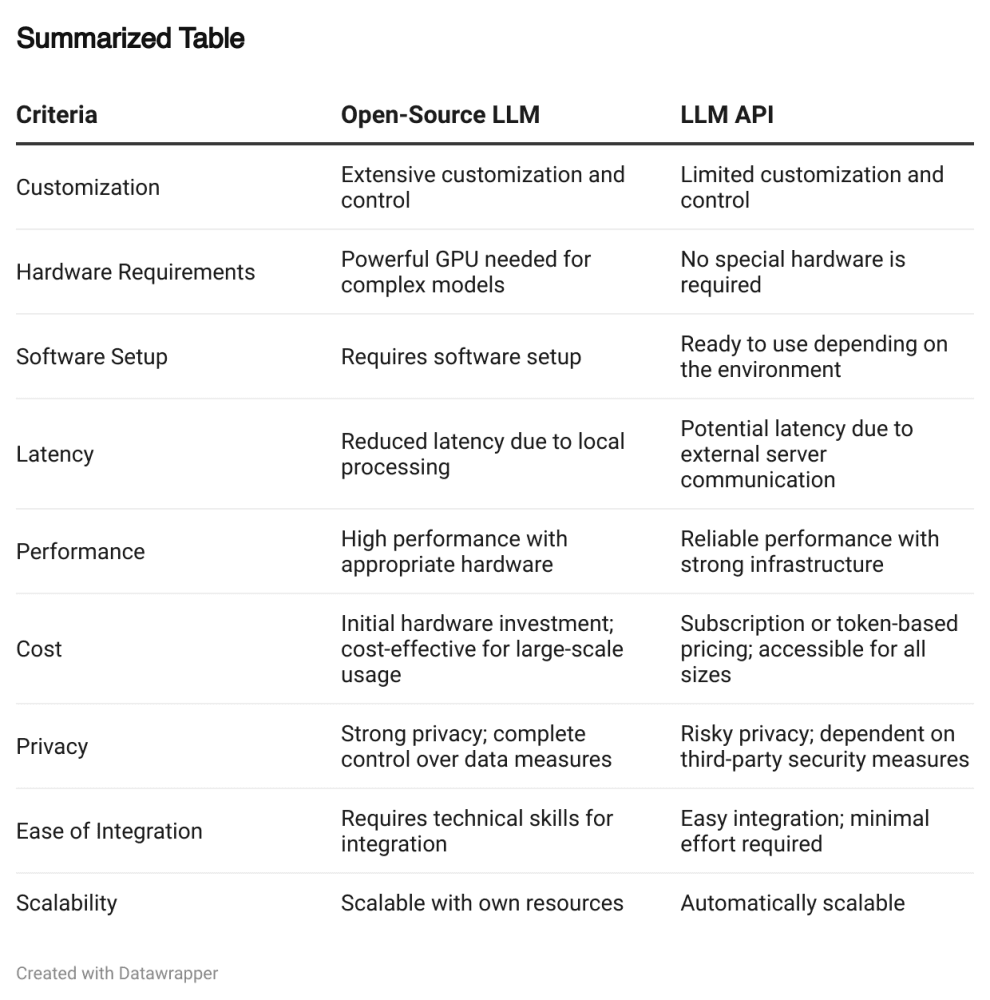

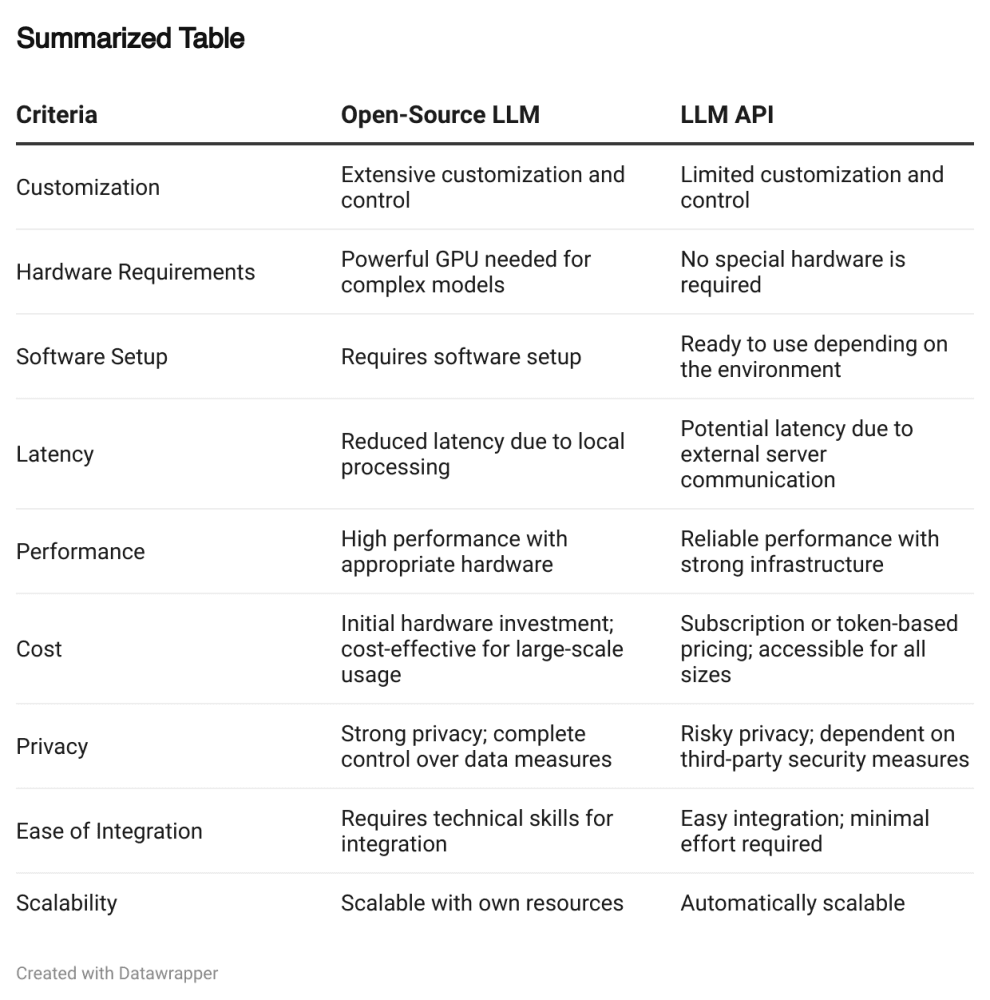

Key Features and Capabilities

1. Technical Aspects:

Open-source LLM:

Hardware Requirements: For complex models, a powerful GPU is essential. Simpler models, however, can run smoothly on a standard desktop or laptop.

Software Setup: Make sure you have a runtime such as Python installed, along with any access key the model provider requires for downloading the weights, before deploying LLMs on your machine.

LLM API:

Ease of Integration: Developers can integrate these APIs with minimal effort, sending requests and receiving real-time responses. The API provider handles the heavy lifting of model training and infrastructure management.
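To make the request-and-response flow concrete, here is a minimal sketch using only the Python standard library. The endpoint URL, the model name, and the JSON layout (a list of role/content messages with a bearer token) are assumptions chosen for illustration; each provider documents its own format, so treat this as a shape to adapt rather than any specific vendor's API.

```python
import json
import urllib.request

# Hypothetical endpoint and model name, used only for illustration.
API_URL = "https://api.example.com/v1/chat/completions"
DEFAULT_MODEL = "example-model"


def build_chat_request(prompt: str, api_key: str,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying one user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed bearer-token scheme; check your provider's documentation.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect and unit-test without network access or a real key.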

2. Performance Comparison:

Open-source LLM:

Reduced Latency: A standout benefit is reduced latency. Running the LLM locally removes the network round trip to external servers, which minimises delays in processing and response times, provided your hardware can run the model quickly enough.

LLM API:

Reliable Performance: While some latency may be introduced, these APIs deliver reliable performance. They are designed to handle continuous loads and provide consistent service delivery.
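Latency claims are easiest to judge by measuring them on your own workload. The sketch below times any text-generation callable and reports the median over several runs; `median_latency` and `fake_backend` are hypothetical names introduced here, and the stand-in backend only sleeps to simulate work. In practice you would pass a function that calls your local model or your API client.

```python
import statistics
import time
from typing import Callable


def median_latency(generate: Callable[[str], str], prompt: str,
                   runs: int = 5) -> float:
    """Return the median wall-clock time in seconds of generate(prompt)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        generate(prompt)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def fake_backend(prompt: str) -> str:
    """Stand-in for a real model call; sleeps briefly to simulate work."""
    time.sleep(0.01)
    return prompt.upper()


if __name__ == "__main__":
    seconds = median_latency(fake_backend, "hello", runs=5)
    print(f"median latency: {seconds * 1000:.1f} ms")
```

The median is used rather than the mean so that a single slow call, such as a cold start, does not dominate the result.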

3. Cost Analysis:

Open-source LLM:

Hardware Setup: Running LLMs locally can reduce costs at high volume, since there are no per-request fees. However, you might need to upgrade your hardware to give the models the computing power they require.

LLM API:

Flexible Pricing: Typically offered on a subscription or token-based model, these APIs provide flexible pricing, making them accessible for businesses of all sizes.
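The trade-off between token-based pricing and an upfront hardware purchase can be estimated with simple arithmetic. The sketch below is a rough model under stated assumptions: the function names are hypothetical, prices are quoted per 1,000 tokens, and the local option is reduced to a one-off hardware cost plus a flat monthly running cost. It ignores staff time, maintenance, and price changes.

```python
from typing import Optional


def monthly_api_cost(requests_per_month: int, tokens_per_request: int,
                     price_per_1k_tokens: float) -> float:
    """Estimate the monthly bill for a token-priced API."""
    total_tokens = requests_per_month * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


def breakeven_months(hardware_cost: float, local_monthly_cost: float,
                     api_monthly_cost: float) -> Optional[float]:
    """Months until local hardware pays for itself, or None if it never does."""
    monthly_saving = api_monthly_cost - local_monthly_cost
    if monthly_saving <= 0:
        return None  # the API is no more expensive, so there is no payback
    return hardware_cost / monthly_saving


if __name__ == "__main__":
    # All figures below are made-up inputs, not real prices.
    api_cost = monthly_api_cost(100_000, 500, 0.002)
    months = breakeven_months(3000.0, 50.0, api_cost)
    print(f"API cost per month: {api_cost:.2f}")
    print(f"Break-even after: {months} months")
```

With low request volumes the break-even point can be years away or never reached, which is why APIs tend to suit small workloads and local deployment tends to suit sustained, large-scale use.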

4. Privacy Considerations:

Open-source LLM:

Strong privacy: Deploying a local LLM ensures complete control over your data, eliminating the need to send sensitive information to external applications.

LLM API:

Privacy Risk: Sending data to a third-party provider can be a privacy concern, although reputable companies implement strict security measures to safeguard your data.

Use Cases

Open-source LLM:

Enterprise-Level Projects: Perfect for large organisations that require deep customisation and control over their AI models.

Specialised Applications: Ideal for niche applications where off-the-shelf models might not meet specific requirements. For instance, a healthcare startup developing a unique diagnostic tool based on proprietary medical data.

Research and Development: Suitable for academic institutions or companies engaged in advanced research that necessitates modifying model architecture and training processes.

LLM API:

Startups and Small Businesses: Ideal for startups that need to deploy AI quickly without investing in infrastructure.

Prototype and MVP Development: Great for quickly building and testing prototypes.

Non-Technical Teams: Perfect for teams without extensive machine learning expertise. For instance, a marketing team using AI to automate content generation.

Making the Right Choice

Consider an open-source LLM if:

You need extensive customisation and control

You are handling sensitive data

Your project needs to scale without ongoing per-request fees

You have the technical expertise and resources

Consider an LLM API if:

You need a quick and easy setup

You require scalable and reliable performance

You are looking for a cost-effective solution with low upfront investment

You want access to the latest AI advancements

Conclusion

In the fast-developing field of AI, choosing the right approach to integrating Large Language Models can make all the difference in your project's success. The choice between open-source local LLMs and LLM APIs is not just a technical decision but a strategic one. It reflects your organisation's priorities, capabilities, and vision for the future.

In the end, whether you build your AI foundation brick by brick with local LLMs or ride the wave of innovation with LLM APIs, the future of AI is in your hands. Embrace the journey, explore the possibilities, and let your vision guide you toward the most fitting and transformative AI solution for your needs.

Let's Discuss Your Project

No commitments, just a friendly chat

Copyright © 2024 Froxy Labs - All Rights Reserved.
